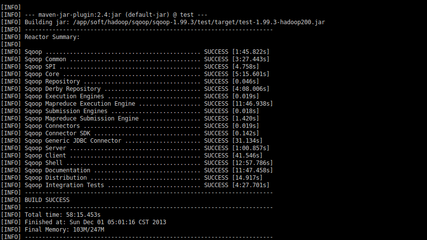

已经装好的hadoop环境是hadoop 2.2.0

下载的sqoop安装包(注意是hadoop200)

http://www.us.apache.org/dist/sqoop/1.99.3/sqoop-1.99.3-bin-hadoop200.tar.gz

2.解压文件到工作目录:

hadoop@hadoopMaster:$ sudo tar -xvf /opt/hn/hadoop_family/sqoop-1.99.3-bin-hadoop200.tar.gz

hadoop@hadoopMaster:mv /opt/hn/hadoop_family/sqoop-1.99.3-bin-hadoop200 /usr/local/sqoop

3.修改环境变量:

hadoop@hadoopMaster:~$ vim /etc/profile

添加如下内容:

#sqoop

export SQOOP_HOME=/usr/local/sqoop

export PATH=$SQOOP_HOME/bin:$PATH

export CATALINA_HOME=$SQOOP_HOME/server

export LOGDIR=$SQOOP_HOME/logs

保存退出即时生效:

source /etc/profile

4.修改sqoop配置:

hadoop@hadoopMaster:~$ vim /usr/local/sqoop/server/conf/sqoop.properties

#修改指向我的hadoop安装目录

org.apache.sqoop.submission.engine.mapreduce.configuration.directory=/usr/local/hadoop/

#把hadoop目录下的jar包都引进来

hadoop@hadoopMaster:~$ vim /usr/local/sqoop/server/conf/catalina.properties

common.loader=/usr/local/hadoop/share/hadoop/common/*.jar,/usr/local/hadoop/share/hadoop/common/lib/*.jar,/usr/local/hadoop/share/hadoop/hdfs/*.jar,/usr/local/hadoop/share/hadoop/hdfs/lib/*.jar,/usr/local/hadoop/share/hadoop/mapreduce/*.jar,/usr/local/hadoop/share/hadoop/mapreduce/lib/*.jar,/usr/local/hadoop/share/hadoop/tools/*.jar,/usr/local/hadoop/share/hadoop/tools/lib/*.jar,/usr/local/hadoop/share/hadoop/yarn/*.jar,/usr/local/hadoop/share/hadoop/yarn/lib/*.jar,/usr/local/hadoop/share/hadoop/httpfs/tomcat/lib/*.jar

5.下载mysql驱动包

mysql-connector-java-5.1.16-bin.jar

6.启动/停止sqoop200

hadoop@hadoopMaster:/usr/local/sqoop/bin$ ./sqoop.sh server start/stop

查看启动日志:

hadoop@hadoopMaster:/usr/local/sqoop/server/logs$ vim catalina.out

7.进入客户端交互目录

hadoop@hadoopMaster:/usr/local/sqoop/bin$ ./sqoop.sh client

+------------------------------------------+

|Sqoop home directory: /usr/local/sqoop |

|Sqoop Shell: Type 'help' or 'h' for help.|

|sqoop:000> |

+------------------------------------------+

为客户端配置服务器:

+---------------------------------------------------------------------+

|sqoop:000> set server --host hadoopMaster --port 12000 --webapp sqoop|

|Server is set successfully |

+---------------------------------------------------------------------+

查版本信息:

+-----------------------------------------------------------------+

|sqoop:000> show version --all |

|client version: |

| Sqoop 1.99.3 revision 2404393160301df16a94716a3034e31b03e27b0b |

| Compiled by mengweid on Fri Oct 18 14:15:53 EDT 2013 |

|server version: |

| Sqoop 1.99.3 revision 2404393160301df16a94716a3034e31b03e27b0b |

| Compiled by mengweid on Fri Oct 18 14:15:53 EDT 2013 |

|Protocol version: |

| [1] |

+-----------------------------------------------------------------+

显示连接器:

+---------------------------------------------------------------------------------------------+

|sqoop:000> show connector --all |

|1 connector(s) to show: |

|Connector with id 1: |

| Name: generic-jdbc-connector |

| Class: org.apache.sqoop.connector.jdbc.GenericJdbcConnector |

| Version: 1.99.3 |

| Supported job types: [IMPORT, EXPORT] |

| Connection form 1: |

| Name: connection |

| Label: Connection configuration |

| Help: You must supply the information requested in order to create a connection object.|

| Input 1: |

| . |

| . |

| . |

| 太长了,就拷贝这一点 |

+---------------------------------------------------------------------------------------------+

创建数据库连接:

+---------------------------------------------------------------------------------------------+

|sqoop:000> create connection --cid 1 |

|Creating connection for connector with id 1 |

|Please fill following values to create new connection object |

|Name: My first |

| |

|Connection configuration |

| |

|JDBC Driver Class: com.mysql.jdbc.Driver |

|JDBC Connection String: jdbc:mysql://localhost:3306/sqoop_stu |

|Username: root |

|Password: ********** |

|JDBC Connection Properties: |

|There are currently 0 values in the map: |

|entry# |

| |

|Security related configuration options |

| |

|Max connections: 100 |

|New connection was successfully created with validation status FINE and persistent id 1 |

+---------------------------------------------------------------------------------------------+

创建导入任务

+------------------------------------------------------------------------------------+

|sqoop:001> create job --xid 1 --type import |

|Creating job for connection with id 1 |

|Please fill following values to create new job object |

|Name: First job |

| |

|Database configuration |

| |

|Schema name: traceweb |

|Table name: trace_web_application |

|Table SQL statement: |

|Table column names: |

|Partition column name: |

|Nulls in partition column: |

|Boundary query: |

| |

|Output configuration |

| |

|Storage type: |

| 0 : HDFS |

|Choose: 0 |

|Output format: |

| 0 : TEXT_FILE |

| 1 : SEQUENCE_FILE |

|Choose: 1 |

|Compression format: |

| 0 : NONE |

| 1 : DEFAULT |

| 2 : DEFLATE |

| 3 : GZIP |

| 4 : BZIP2 |

| 5 : LZO |

| 6 : LZ4 |

| 7 : SNAPPY |

|Choose: 0 |

|Output directory: /opt/sqoop_output |

| |

|Throttling resources |

| |

|Extractors: |

|Loaders: |

|New job was successfully created with validation status FINE and persistent id 1 |

+------------------------------------------------------------------------------------+

启动job:

+------------------------------------------------

|sqoop:000> start job --jid 1

+------------------------------------------------

查看导入状态:

+------------------------------------------------

|sqoop:000> status job --jid 1

|Submission details

|Job ID: 1

|Server URL: http://hadoopMaster:12000/sqoop/

|Created by: hadoop

|Creation date: 2014-05-23 18:51:05 CST

|Lastly updated by: hadoop

|External ID: job_local1566994033_0001

| http://localhost:8080/

|2014-05-23 18:51:35 CST: UNKNOWN

+------------------------------------------------

查看输出目录:

+--------------------------------------------------------------------+

hadoop@hadoopMaster:~$ l /opt/sqoop_output/

总用量 92

drwxrwxr-x 2 hadoop hadoop 4096 5月 23 18:52 .

drwxr-xr-x 8 hadoop hadoop 4096 5月 23 18:51 ..

-rw-r--r-- 1 hadoop hadoop 209 5月 23 18:51 part-m-00000.seq

-rw-rw-r-- 1 hadoop hadoop 12 5月 23 18:51 .part-m-00000.seq.crc

-rw-r--r-- 1 hadoop hadoop 86 5月 23 18:51 part-m-00001.seq

-rw-rw-r-- 1 hadoop hadoop 12 5月 23 18:51 .part-m-00001.seq.crc

-rw-r--r-- 1 hadoop hadoop 86 5月 23 18:51 part-m-00002.seq

-rw-rw-r-- 1 hadoop hadoop 12 5月 23 18:51 .part-m-00002.seq.crc

-rw-r--r-- 1 hadoop hadoop 86 5月 23 18:51 part-m-00003.seq

-rw-rw-r-- 1 hadoop hadoop 12 5月 23 18:51 .part-m-00003.seq.crc

-rw-r--r-- 1 hadoop hadoop 86 5月 23 18:51 part-m-00004.seq

-rw-rw-r-- 1 hadoop hadoop 12 5月 23 18:51 .part-m-00004.seq.crc

-rw-r--r-- 1 hadoop hadoop 86 5月 23 18:51 part-m-00005.seq

-rw-rw-r-- 1 hadoop hadoop 12 5月 23 18:51 .part-m-00005.seq.crc

-rw-r--r-- 1 hadoop hadoop 207 5月 23 18:51 part-m-00006.seq

-rw-rw-r-- 1 hadoop hadoop 12 5月 23 18:51 .part-m-00006.seq.crc

-rw-r--r-- 1 hadoop hadoop 86 5月 23 18:51 part-m-00007.seq

-rw-rw-r-- 1 hadoop hadoop 12 5月 23 18:51 .part-m-00007.seq.crc

-rw-r--r-- 1 hadoop hadoop 206 5月 23 18:51 part-m-00008.seq

-rw-rw-r-- 1 hadoop hadoop 12 5月 23 18:51 .part-m-00008.seq.crc

-rw-r--r-- 1 hadoop hadoop 682 5月 23 18:51 part-m-00009.seq

-rw-rw-r-- 1 hadoop hadoop 16 5月 23 18:51 .part-m-00009.seq.crc

-rw-r--r-- 1 hadoop hadoop 0 5月 23 18:51 _SUCCESS

-rw-rw-r-- 1 hadoop hadoop 8 5月 23 18:51 ._SUCCESS.crc

+--------------------------------------------------------------------

sqoop:000> show job

+----+------------+--------+-----------+---------+

| Id | Name | Type | Connector | Enabled |

+----+------------+--------+-----------+---------+

| 1 | First job | IMPORT | 1 | true |

| 2 | importHDFS | IMPORT | 1 | true |

+----+------------+--------+-----------+---------+

sqoop:000> delete job --jid 1

sqoop:000> show job

+----+------------+--------+-----------+---------+

| Id | Name | Type | Connector | Enabled |

+----+------------+--------+-----------+---------+

| 2 | importHDFS | IMPORT | 1 | true |

+----+------------+--------+-----------+---------+

sqoop:000> delete job --jid 2

sqoop:000> show job

+----+------+------+-----------+---------+

| Id | Name | Type | Connector | Enabled |

+----+------+------+-----------+---------+

+----+------+------+-----------+---------+

sqoop:000> show connection

批处理模式:

sqoop.sh client /opt/sqoop/script.sqoop

hadoop@hadoopMaster:$ vim /opt/sqoop/script.sqoop

#指定服务器信息

set server --host hadoopMaster --port 12000 --webapp sqoop

#执行JOB

start job --jid 1

+--------------------------------------------------------------------+

hadoop@hadoopMaster:/usr/local/sqoop/bin$ ./sqoop.sh client /opt/hadoop/mysql/batchModel.sqoop

Sqoop home directory: /usr/local/sqoop

sqoop:000> set server --host hadoopMaster --port 12000 --webapp sqoop

Server is set successfully

sqoop:000> start job --jid 1

Submission details

Job ID: 1

Server URL: http://hadoopMaster:12000/sqoop/

Created by: hadoop

Creation date: 2014-05-30 10:55:10 CST

Lastly updated by: hadoop

External ID: job_local945860799_0003

http://localhost:8080/

2014-05-30 10:55:10 CST: BOOTING - Progress is not available

+--------------------------------------------------------------------+

https://cwiki.apache.org/confluence/display/SQOOP/Sqoop2+Quickstart#Sqoop2Quickstart-Fullimportdemo

================================MYSQL=======================================

hadoop@hadoopMaster:~$ mysql -uroot -pjava

mysql> create database sqoop_stu;

Query OK, 1 row affected (0.03 sec)

mysql> use sqoop_stu;

Database changed

mysql> create table student(id int(3) auto_increment not null primary key, name char(10) not null, address varchar(50));

Query OK, 0 rows affected (0.41 sec)

mysql> insert into student values(1, 'Tom','beijing'),(2, 'Joan','shanghai'), (3, 'Wang', 'shenzheng');

Query OK, 3 rows affected (0.07 sec)

Records: 3 Duplicates: 0 Warnings: 0

CREATE TABLE `demo_blog` (`id` int(11) NOT NULL AUTO_INCREMENT, `blog` varchar(100) NOT NULL, PRIMARY KEY (`id`)) ENGINE=MyISAM DEFAULT CHARSET=utf8;

CREATE TABLE `demo_log` (`operator` varchar(16) NOT NULL, `log` varchar(100) NOT NULL) ENGINE=MyISAM DEFAULT CHARSET=utf8;

https://hbase.apache.org/book/configuration.html#hadoop

http://www.tuicool.com/articles/NVfEVnn

爱华网

爱华网